Review Session

- Date: Fri, Dec 15 at 12:00

- Duration: 2.5 hours

- Location: FIP 204

This is a cumulative exam.

Format

- On paper - handwritten.

- a mix of multiple choice, and short/long answer

- closed-book, closed-technology

- i.e. computers and calculators are not permitted

- 1 double-sided 8.5x11 handwritten formula sheet is allowed

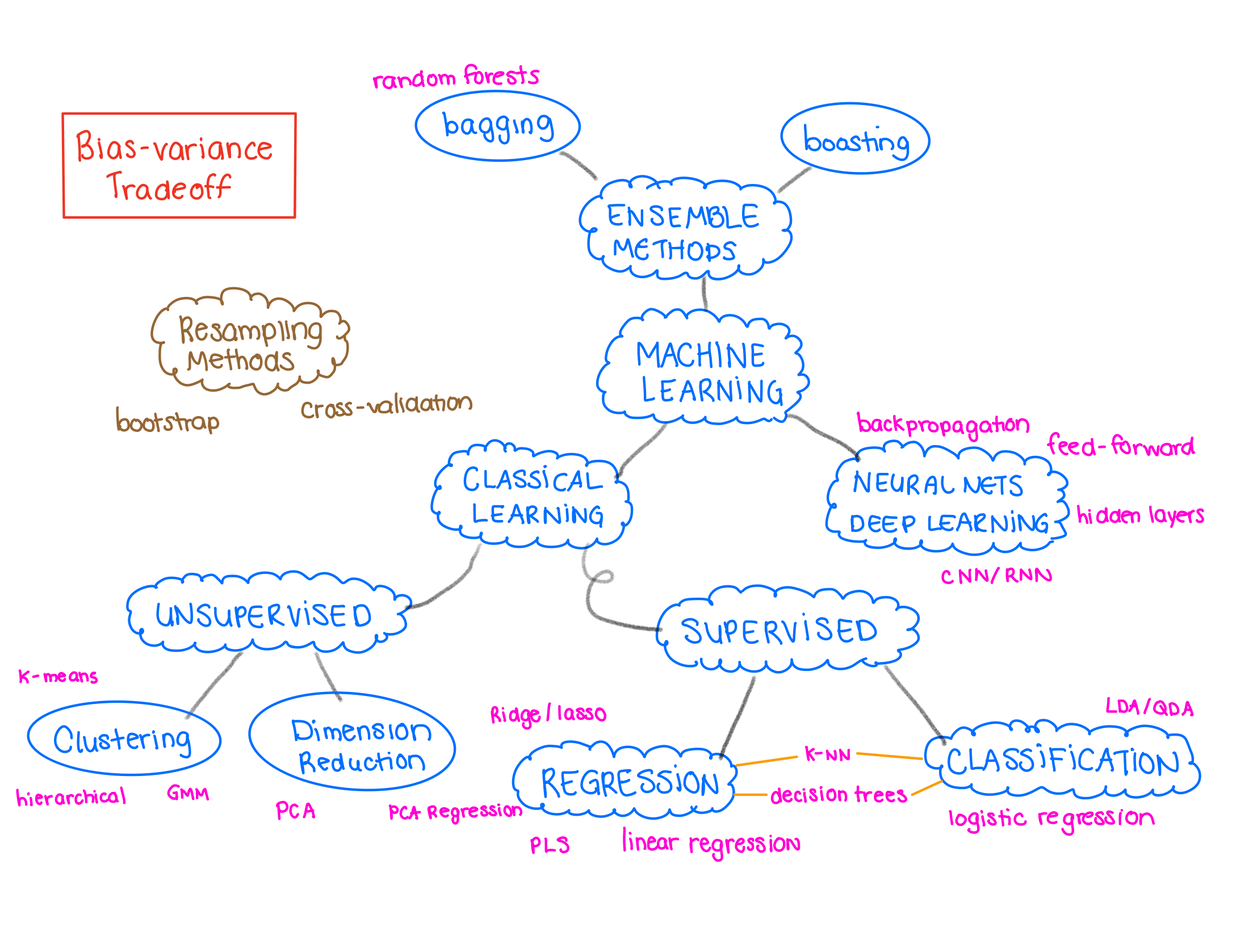

Overview of the course

In this course, we covered a wide range of topics that provide a comprehensive understanding of various statistical and machine learning techniques. Here’s a brief summary of the key topics covered in each lecture …

Introduction

Introduction to R and RStudio: [slides][lab1]

- Familiarization with R and RStudio for statistical analysis.

- R basics, syntax, libraries and installations

- basic data manipulation, summarization, and visualization

- Produce an HTML output from an Rmd file

Notation and Terminology:

X = predictors, Y = response, \(n\) = number of observations, \(p\) = number of predictors

Supervised (X’s and Y’s) vs unsupervised (no Y)

Inference vs. prediction

Goal of prediction: to estimate an outcome or value based on historical data, patterns, and relationships present in the input features. The fundamental idea is to use a model trained on existing data to make informed predictions about unseen or future data.

Goal of inference:

To gain insights about the underlying data generation process, relationships between variables, or the impact of certain factors.

To draw conclusions or make statements about the population or data generating mechanism.

Supplementary Reading: ISLR Chapter 1.

General model \[Y = f(X) + \epsilon\] Statistical learning is concerned with estimating \(f\). Estimates of \(f\) are denoted by \(\hat f\), and \(\hat y\) will represent the resulting prediction for \(y\).

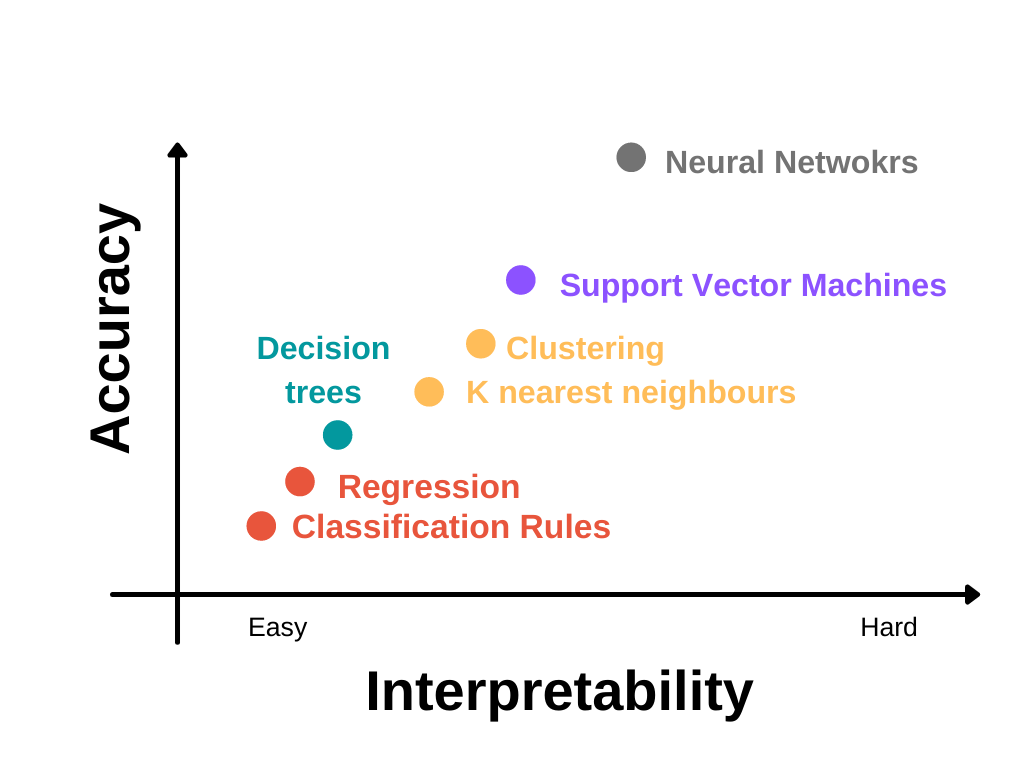

Accuracy vs. Interpretability

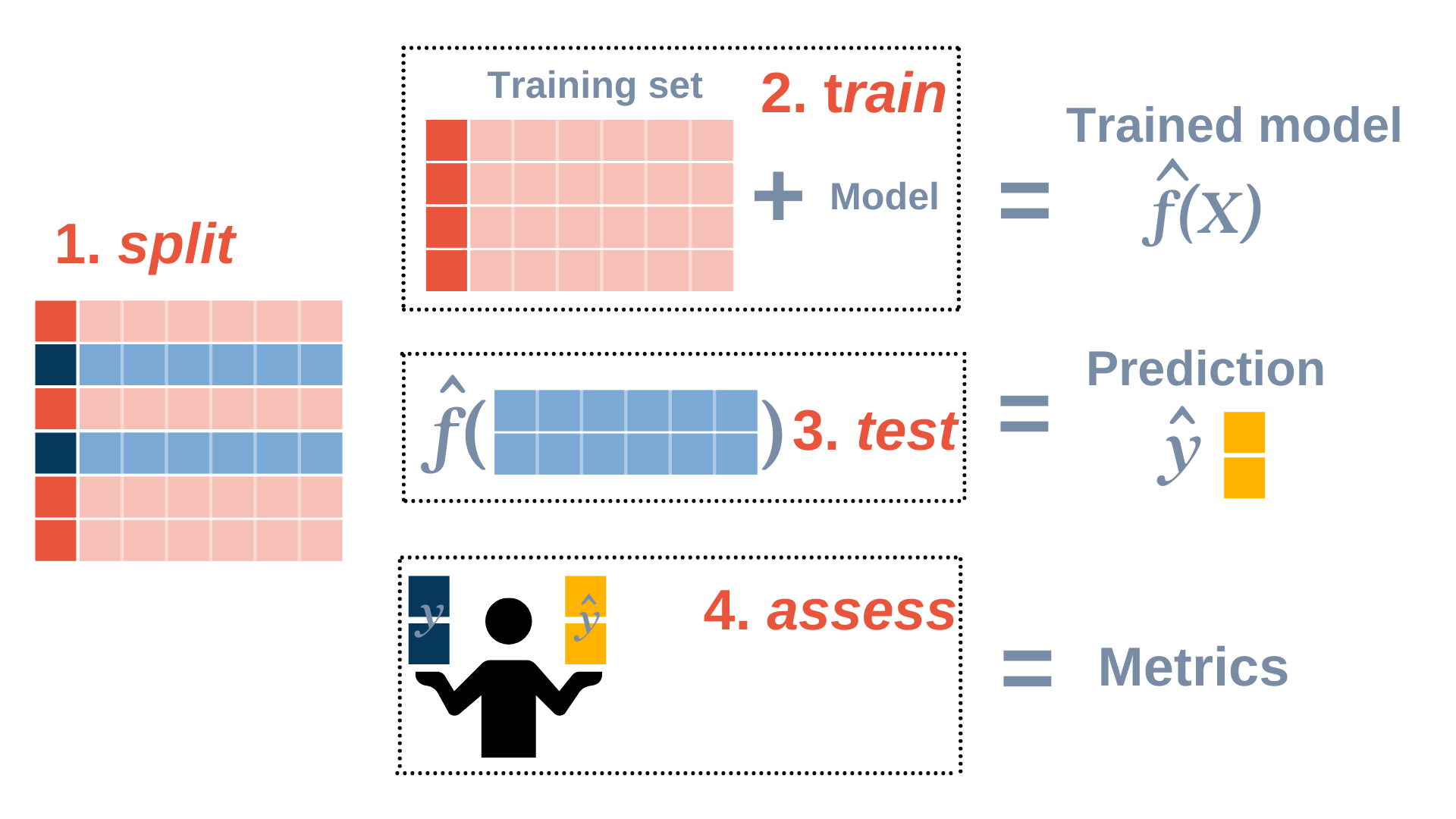

Supervised Learning

General workflow:

Key Concepts

When to scale? (pretty much any distance-based method).

Resampling methods

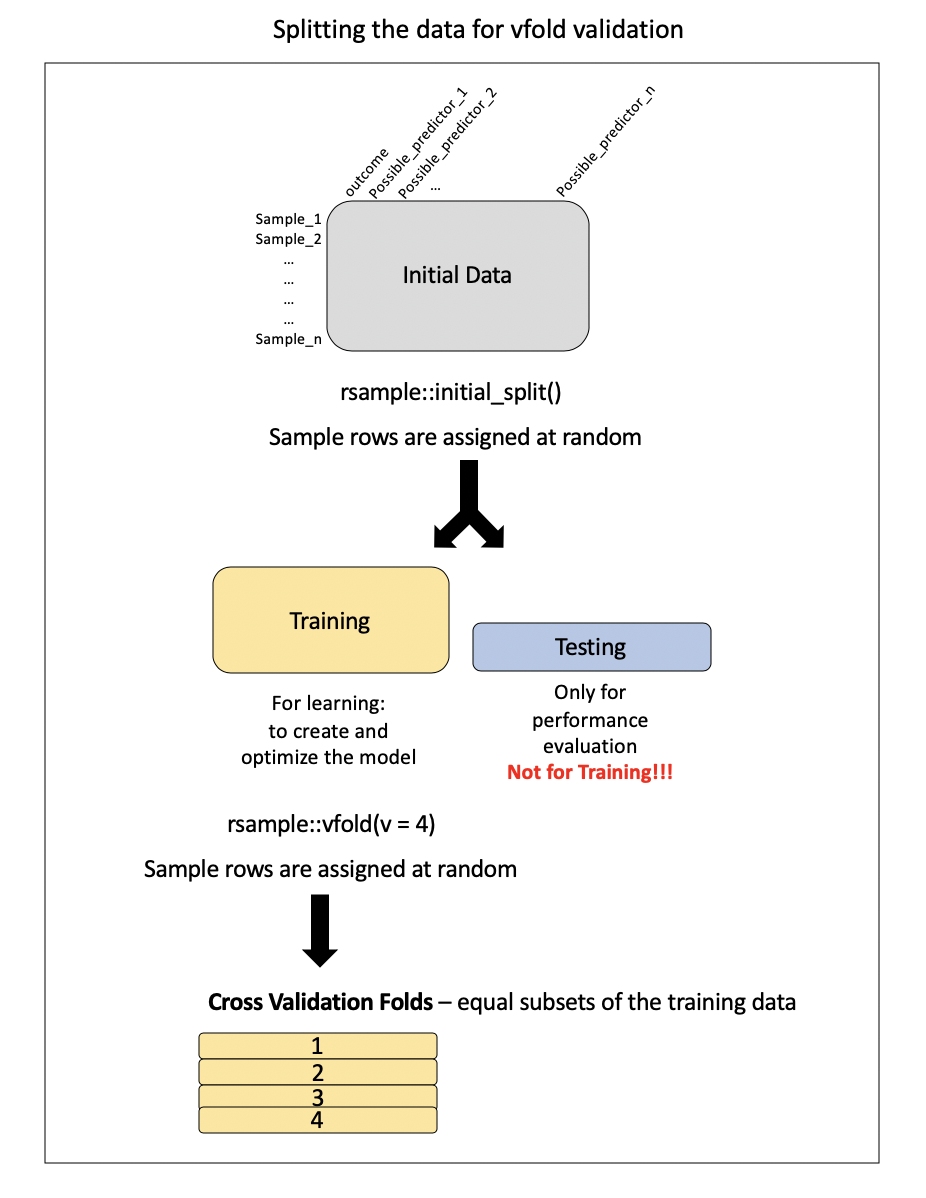

Cross-validation

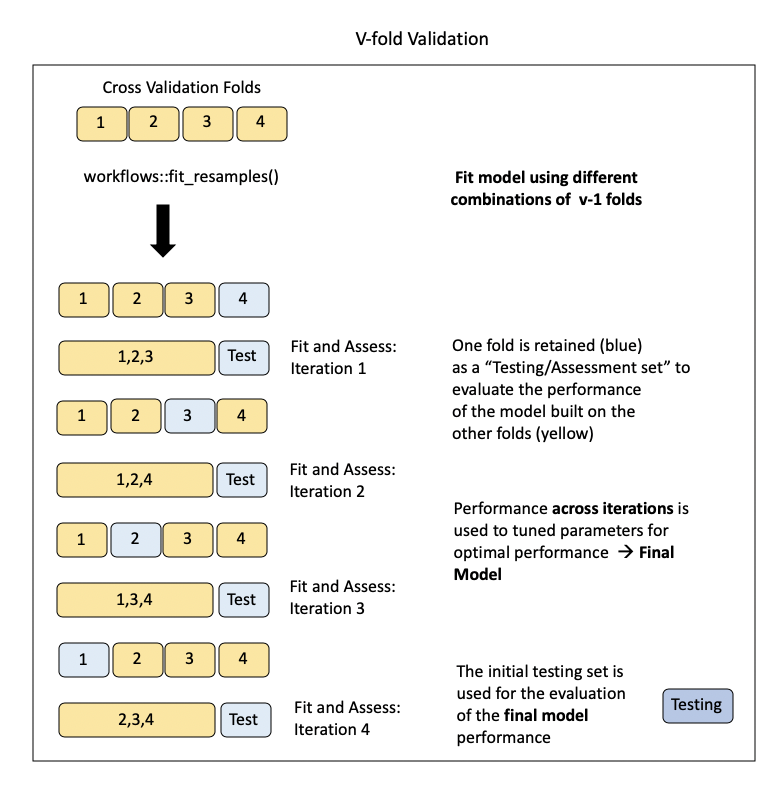

Training may involve model tuning. Model tuning with cross-validation is a crucial step in the machine learning pipeline to optimize the performance of a model by adjusting hyperparameters. Hyperparameters are settings that are not learned from the data but need to be specified before the training process. Cross-validation is used to assess different hyperparameter options and choose the value with the best generalization performance. Here’s how it works:

Hyperparameter Selection:

Choose a set of candidate hyperparameters for your machine learning model. These might include parameters like the depth of a decision tree, \(k\) = the number of nearest neighbours to be used in KNN, the regularization parameter \(\lambda\) in LASSO/Ridge regression, …

Cross-Validation Setup:

Model Training and Evaluation:

For each candidate value of your hyperparameter:

Train the model on the training subset with the chosen hyperparameters. See Figure 2.

Evaluate the model’s performance on the validation subset using a predefined metric (e.g., accuracy, precision, recall). See Figure 2.

Compute the average performance across all folds to get a more reliable estimate of the model’s generalization performance with the current hyperparameter settings.

Final Model Selection:

- Refit the model on the full training set with the chosen hyperparameter value, i.e. the one that gave the best average performance across all folds.

Final Model Assessment

- For a more robust assessment, perform a final evaluation on a separate test set that wasn’t used during hyperparameter tuning.
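The tuning steps above can be sketched in base R. This is only an illustration under stated assumptions: the data are simulated, the hyperparameter is the degree of a polynomial fit, and names such as `cv_mse` and `best_d` are made up for the example.

```r
# Simulated data (an assumption for illustration, not course data)
set.seed(1)
n <- 200
x <- runif(n, -2, 2)
y <- sin(2 * x) + rnorm(n, sd = 0.3)
dat <- data.frame(x = x, y = y)

# Hold out a test set that plays no role in tuning
test_idx <- sample(n, 50)
train <- dat[-test_idx, ]
test  <- dat[test_idx, ]

k <- 5
folds <- sample(rep(1:k, length.out = nrow(train)))  # random fold labels
degrees <- 1:8                                        # candidate hyperparameter values

cv_mse <- sapply(degrees, function(d) {
  fold_mse <- sapply(1:k, function(f) {
    fit  <- lm(y ~ poly(x, d), data = train[folds != f, ])  # train on k - 1 folds
    pred <- predict(fit, newdata = train[folds == f, ])     # validate on the held-out fold
    mean((train$y[folds == f] - pred)^2)
  })
  mean(fold_mse)  # average validation MSE across the k folds
})

best_d <- degrees[which.min(cv_mse)]

# Refit on all training data with the chosen value, then assess once on the test set
final_fit <- lm(y ~ poly(x, best_d), data = train)
test_mse  <- mean((test$y - predict(final_fit, newdata = test))^2)
```

The test set is touched only once, after `best_d` has been fixed, so `test_mse` is not biased by the tuning.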

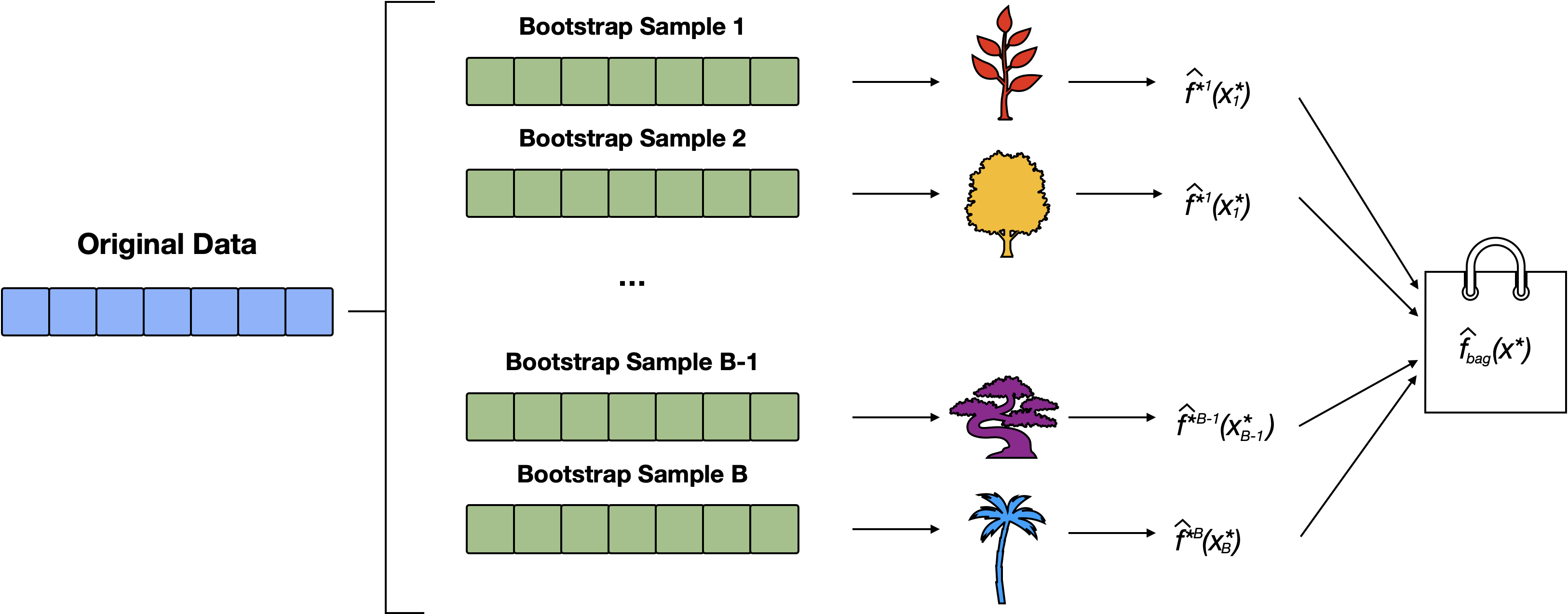

Bootstrap

Bootstrap resampling is a statistical technique that involves generating multiple subsamples (with replacement) from a dataset to assess the variability of a statistical estimator or to improve the robustness of a machine learning model. Here’s how we saw bootstrap in use:

Estimation of standard errors and confidence intervals: bootstrap resampling can be used to estimate standard errors and confidence intervals around model parameters. By repeatedly resampling the dataset and refitting the model, you can assess the variability of the estimated parameters. Know how to read/write a program for doing this.
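A minimal sketch of such a program, assuming the built-in `mtcars` data as a stand-in and the slope of `mpg ~ wt` as the parameter of interest (both are choices made for this example only):

```r
set.seed(42)
dat <- mtcars   # built-in data set, used here only as a stand-in
B <- 1000       # number of bootstrap resamples
n <- nrow(dat)

boot_slopes <- numeric(B)
for (b in 1:B) {
  idx <- sample(n, n, replace = TRUE)        # resample rows with replacement
  fit_b <- lm(mpg ~ wt, data = dat[idx, ])   # refit the model on the resample
  boot_slopes[b] <- coef(fit_b)["wt"]
}

boot_se <- sd(boot_slopes)                         # bootstrap standard error
boot_ci <- quantile(boot_slopes, c(0.025, 0.975))  # 95% percentile interval

# Formula-based standard error from lm(), for comparison
lm_se <- summary(lm(mpg ~ wt, data = dat))$coefficients["wt", "Std. Error"]
```

The standard deviation of the bootstrap estimates plays the role of the standard error, and the 2.5% and 97.5% quantiles give a percentile confidence interval.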

Bagging (Bootstrap Aggregating): the bootstrap can be employed in ensemble methods like bagged decision trees. More generally, bagging involves training multiple instances of the same learning algorithm on different bootstrap samples of the training data and combining their predictions to reduce variance and improve generalization.

Random Forests: an extension of bagging in which each tree is trained on a bootstrap sample and only a random subset of features is considered at each split, introducing an additional layer of randomness. The trees' predictions are combined through voting or averaging.

Feature Importance: In bagging and random forests, the bootstrap provides a natural way to estimate the importance of each feature in a model. The importance is inferred by assessing how the model performance changes with and without each feature.

Out-of-bag error estimates: In ensemble methods like Random Forests and Bagging, out-of-bag (OOB) estimates of error provide a way to assess the model’s performance without the need for a separate validation set or cross-validation. Here’s how it works:

During the training of each tree in the ensemble, a bootstrap sample (a random sample with replacement) is drawn from the original dataset; the instances from the training set that are not sampled are called the “out-of-bag” samples.

For each observation in the training set we predict its target variable using only the trees for which it was an out-of-bag sample and calculate the error (e.g., classification error or mean squared error).

Bias-Variance tradeoff

The bias-variance tradeoff suggests that as you decrease bias (by using a more complex model), you often increase variance, and vice versa. The challenge is to find the right level of model complexity that minimizes the total error on unseen data. The complexity of a model is often controlled by hyperparameters or features such as the degree of a polynomial, the depth of a decision tree, or the number of parameters in a neural network.

\[ \text{Total Error} = \text{Bias}^2 + \text{Variance} + \text{Irreducible Error} \]

The U-shaped test MSE (generalization error in this visualization) is a fundamental property of statistical learning that holds regardless of the particular dataset and statistical method being used.

Bias (Model Error):

Definition: Bias is the error introduced by approximating a real-world problem with a simplified model. It represents the difference between the predicted values of the model and the true values.

Effect: High bias models tend to oversimplify the underlying patterns in the data, leading to systematic errors.

Relation to Model Complexity: Bias is often associated with models that are too simple or have insufficient capacity to capture the complexity of the underlying relationship in the data.

Variance (Model Sensitivity):

Definition: Variance is the error introduced by the model’s sensitivity to the fluctuations in the training data. It measures how much the model’s predictions vary when trained on different subsets of the data.

Effect: High variance models fit the training data too closely and may not generalize well to new, unseen data.

Relation to Model Complexity: Variance is often associated with models that are too complex, capturing noise or specific patterns in the training data that do not generalize.

Irreducible Error:

Definition: Irreducible error is the error that cannot be reduced by any model. It stems from the inherent randomness or noise in the data, and it represents the minimum error that any predictive model will have.

Effect: No matter how accurate a model is, there will always be some level of error that cannot be eliminated.

Relation to Total Error: Irreducible error sets a lower limit on the total error that any model can achieve. It is the baseline level of error that even an optimal model cannot overcome.

Total Error:

Definition: Total error is the sum of the squared bias, variance, and irreducible error.

Relationship: The bias-variance tradeoff illustrates that as you reduce bias, variance tends to increase, and vice versa.

\[ \text{Total Error} = \text{Bias}^2 + \text{Variance} + \text{Irreducible Error} \]

The goal is to find a model complexity that minimizes the total error by balancing bias and variance. Achieving zero bias and zero variance is typically not possible, and the tradeoff involves finding the right level of complexity.
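For reference, the decomposition can be written for a single test point \(x_0\), using the general model \(Y = f(X) + \epsilon\) from the introduction. The notation \(x_0\), \(y_0\) is introduced here for illustration; the expectation is taken over repeated training sets and the noise in \(y_0\):

\[ E\left[\left(y_0 - \hat f(x_0)\right)^2\right] = \left[\text{Bias}\left(\hat f(x_0)\right)\right]^2 + \text{Var}\left(\hat f(x_0)\right) + \text{Var}(\epsilon), \qquad \text{Bias}\left(\hat f(x_0)\right) = E\left[\hat f(x_0)\right] - f(x_0) \]

The last term, \(\text{Var}(\epsilon)\), is the irreducible error: it does not involve \(\hat f\), so no choice of model can reduce it.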

Underfitting vs overfitting

Underfitting (High Bias): Occurs when a model is too simple to capture the underlying patterns in the data. It performs poorly on both the training and new data.

Overfitting (High Variance): Occurs when a model is too complex and fits the training data too closely. It performs well on the training data but poorly on new, unseen data.

Methods that leverage these principles include: LASSO and Ridge Regression, random forests, and bagging.

Regression Models

Regression methods in machine learning are a set of techniques used to model and analyze the relationships between a dependent variable (AKA the target or response variable) and one or more independent variables (AKA features or predictors). The goal is to be able to predict the value of the dependent variable based on the values of the independent variables. Regression models are widely used for both prediction and understanding the underlying relationships within the data. Here are the regression methods we talked about in the course:

Linear Regression:

Model: Assumes a linear relationship between the input features and the output. The model is represented as

\[\begin{equation}Y = \beta_0 + \beta_1 X_{1} + \beta_2 X_{2} + \dots + \beta_p X_p + \epsilon\end{equation}\]

- \(Y\) is the response variable

- \(X_{j}\) is the \(j^{th}\) predictor variable

- \(\beta_0\) is the intercept (unknown)

- \(\beta_j\) are the regression coefficients (unknown)

- \(\epsilon\) is the error, assumed \(\epsilon_i \sim N(0, \sigma^2)\).

Objective: Minimizes the sum of squared differences between predicted and actual values.

Use Cases: Suitable for problems where the relationship between variables is approximately linear.

Assumptions:

Linearity: The relationship between the independent and dependent variables is assumed to be (approximately) linear.

Independence of Errors: The errors (residuals) in the model should be independent of each other.

Homoscedasticity: the variance of the residuals is constant across all levels of the predictors.

Together with normality of the errors, the last 2 assumptions can be summarized as \(\epsilon_i \sim N(0, \sigma^2)\), independently for each observation.

We saw how we could include:

- interaction terms (e.g. \(X_1*X_2\)) to account for scenarios where the impact of one predictor on the response depends on the level or condition of another predictor.

- categorical predictors (by creating dummy variables)

- higher order terms e.g. (\(X_1^3\)) as a way of introducing curvature to capture more complex patterns in the data (polynomial regression)

For feature selection we could use:

- forward/backwards step-wise selection

- choose the model with the highest adjusted \(R^2\) among a set of candidate models

- k-fold cross-validation (we never did this but hopefully you can visualize how that makes sense)

- use feature importances from tree-based models

Be sure to know how to:

- write down the fitted equation of the line(s) from R output

- calculate predictions using R code or by a mathematical equation (final calculation not possible since you are not permitted a calculator)

- read and interpret p-values

- read and interpret R-squared values

- use the lm() function and read summary(lmoutput)
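As a sketch of these skills, the following fits a model, reads off the coefficients, and checks a prediction computed from the fitted equation against `predict()`. The `mtcars` data and the values in `new_car` are assumptions chosen only for illustration.

```r
fit <- lm(mpg ~ wt + hp, data = mtcars)   # mtcars is a stand-in data set
b <- coef(fit)                            # named vector: (Intercept), wt, hp

# Fitted equation: mpg-hat = b0 + b1 * wt + b2 * hp
new_car <- data.frame(wt = 3, hp = 110)   # hypothetical new observation

by_hand    <- b["(Intercept)"] + b["wt"] * new_car$wt + b["hp"] * new_car$hp
by_predict <- predict(fit, newdata = new_car)

summary(fit)$r.squared      # R-squared
summary(fit)$coefficients   # estimates, standard errors, t values, p-values
```

On the exam you would stop at the written-out expression for `by_hand`, since the final arithmetic needs a calculator.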

Diagnostic Plots

Residuals vs Fitted: checks for linearity. A “good” plot will have a roughly horizontal red line, without distinct patterns in the residuals.

Normal Q-Q: checks if residuals are normally distributed. It’s “good” if points follow the straight dashed line.

Scale-Location: checks the equal variance of the residuals. “Good” to see horizontal line with equally spread points.

Residuals vs Leverage: identifies influential points.

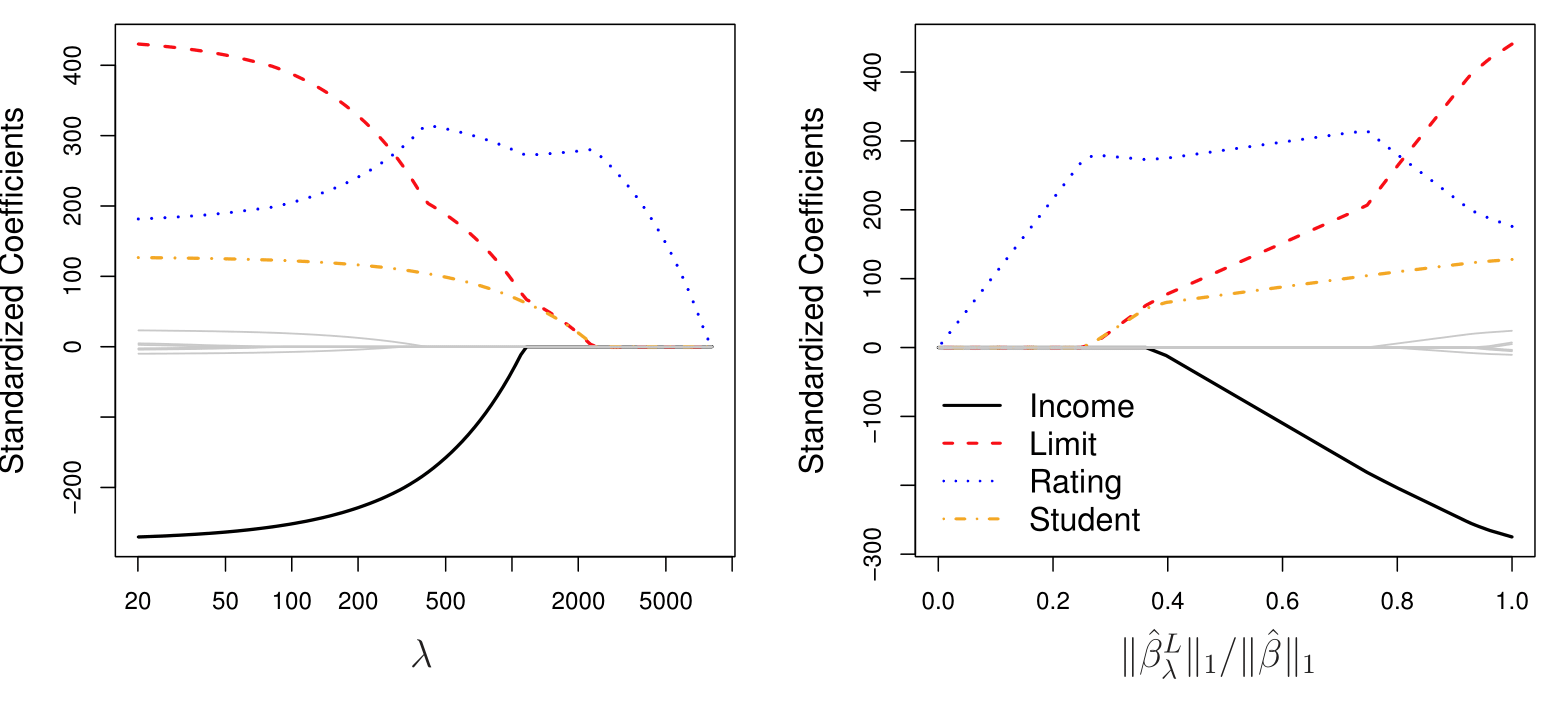

Regularization techniques

Ridge Regression and Lasso (Least Absolute Shrinkage and Selection Operator) are regularization techniques used in linear regression to prevent overfitting and handle multicollinearity. Both methods add a regularization term to the linear regression objective function, influencing the model coefficients during training.

Ridge Regression (L2 Regularization):

Model: A regularized version of linear regression that adds a penalty term proportional to the square of the magnitude of the coefficients.

Objective: Minimizes the sum of squared differences with the addition of a regularization term.

\[ \text{Minimize } J(\beta) = \sum_{i=1}^{N} (y_i - \beta_0 - \sum_{j=1}^{p} x_{ij}\beta_j)^2 + \lambda \sum_{j=1}^{p} \beta_j^2 \]

Regularization Term: \(\lambda \sum_{j=1}^{p} \beta_j^2\)

Purpose:

Shrinks coefficients towards zero, which reduces variance at the cost of a small increase in bias.

Stabilizes the coefficient estimates when predictors are highly correlated (multicollinearity).

Effect on Coefficients:

- Coefficients become small but are not set exactly to zero, so all features stay in the model.

Hyperparameter: \(\lambda\) controls the strength of regularization.

Use Cases: Helps prevent overfitting, particularly when dealing with multicollinearity among input features.

Lasso Regression (L1 Regularization):

Model: Similar to Ridge regression but adds a penalty term proportional to the absolute values of the coefficients.

Objective: Minimizes the sum of squared differences with the addition of a regularization term.

\[ \text{Minimize } J(\beta) = \sum_{i=1}^{N} (y_i - \beta_0 - \sum_{j=1}^{p} x_{ij}\beta_j)^2 + \lambda \sum_{j=1}^{p} |\beta_j| \]

Regularization Term: \(\lambda \sum_{j=1}^{p} |\beta_j|\)

Purpose:

Promotes sparsity by encouraging exact zero coefficients.

Effective for feature selection, automatically setting some coefficients to zero.

Effect on Coefficients:

- Can lead to exact zero coefficients, effectively removing irrelevant features.

Hyperparameter: \(\lambda\) controls the strength of regularization.

Use Cases: Useful for feature selection as it tends to drive some coefficients to exactly zero.

Key difference: Ridge Regression shrinks coefficients towards zero whereas Lasso can lead to exact zero coefficients, performing feature selection.
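The difference is easiest to see in a special case where both problems have closed-form solutions. The sketch below assumes \(n = p\), \(X\) equal to the identity matrix, and no intercept, so the least squares estimate is \(\hat\beta_j = y_j\); the numbers in `beta_ols` are made up.

```r
# Special case (an assumption for illustration): X is the identity matrix, no intercept,
# so least squares gives beta_ols[j] = y[j] and each penalized problem has a closed form.
beta_ols <- c(3, -1.5, 0.4, -0.2)
lambda <- 1

ridge <- beta_ols / (1 + lambda)                               # proportional shrinkage
lasso <- sign(beta_ols) * pmax(abs(beta_ols) - lambda / 2, 0)  # soft-thresholding

rbind(ols = beta_ols, ridge = ridge, lasso = lasso)
```

Ridge scales every coefficient by the same factor, so none becomes exactly zero; lasso subtracts a fixed amount and sets any coefficient smaller than \(\lambda/2\) in absolute value to zero.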

PCA Regression (Principal Component Regression):

- Model: Uses principal component analysis (PCA) to transform the original input features into a set of orthogonal components. A regression model is then built on the principal components.

- Objective: Reduces dimensionality and multicollinearity in the data.

- Use Cases: Useful when dealing with a high number of correlated features, aiming to capture most of the variance with fewer components.

Partial Least Squares (PLS) Regression:

- Model: Like PCA, PLS also transforms the input features but does so in relation to the output variable. It iteratively constructs latent variables that maximize the covariance between the input features and the target variable.

- Objective: Simultaneously achieves dimensionality reduction and predicts the target variable.

- Use Cases: Effective in situations with high-dimensional data and when there is a strong relationship between predictors and the response.

You should know how to read the plots that show how the coefficients change across a range of regularization strengths.

The lasso coefficient estimates for the Credit data set are shown as a function of \(\lambda\) and \(\|\hat\beta^L_\lambda\|_1 / \|\hat\beta\|_1\).

- R function:

glmnet with alpha = 1 (lasso) or alpha = 0 (ridge); cv.glmnet to decide on the appropriate amount of shrinkage

Assessing Regression Models:

Mean Squared Error (MSE) and Training vs. Testing MSE.

\[ MSE = \frac{1}{n} \sum_{i=1}^{n} (y_i - \hat{y}_i)^2 \]

We typically care more about the MSE calculated on the test set. Some general trends:

As model flexibility increases, the training MSE will decrease, but the test MSE may not.

When a method yields a small training MSE but a large test MSE, it is said to be overfitting the data.

We almost always expect the training MSE to be smaller than the test MSE.

The training MSE declines monotonically as flexibility increases.
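These trends can be checked on simulated data. In this sketch the true function, the noise level, and the use of polynomial degree as the measure of flexibility are all assumptions made for illustration.

```r
set.seed(2)
n <- 100
x <- runif(2 * n, -2, 2)
y <- x^3 - 2 * x + rnorm(2 * n)
train <- data.frame(x = x[1:n], y = y[1:n])
test  <- data.frame(x = x[(n + 1):(2 * n)], y = y[(n + 1):(2 * n)])

mse <- function(actual, predicted) mean((actual - predicted)^2)

degrees <- 1:10   # higher degree = more flexible model
train_mse <- test_mse <- numeric(length(degrees))
for (d in degrees) {
  fit <- lm(y ~ poly(x, d), data = train)
  train_mse[d] <- mse(train$y, fitted(fit))
  test_mse[d]  <- mse(test$y, predict(fit, newdata = test))
}
round(rbind(train_mse, test_mse), 3)
```

Because each polynomial model contains the previous one, the training MSE cannot increase with the degree; the test MSE has no such guarantee.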

Classification Models

Classification methods in machine learning are algorithms designed to assign predefined labels or categories to input data based on their features. The goal is to build a model that can learn and generalize patterns from labeled training data to make accurate predictions on new, unseen data. Here are the classification methods we talked about in this course:

Logistic Regression:

- Model: Despite its name, logistic regression is used for binary classification. It models the probability that an instance belongs to a particular class using the logistic function.

- Objective: Maximizes the likelihood of the observed data.

- Use Cases: Binary classification problems where the relationship between features and the log-odds of the response variable is assumed to be linear.

- Differences from Linear Regression: MLE rather than OLS

- Connection with the glm model: logistic regression is a GLM with a logit link function (family = binomial)

Similar extensions/discussion as said in linear regression. See Midterm 2 Review
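A short sketch of fitting a logistic regression and turning the linear predictor (log-odds) into a probability. The `mtcars` data, the model `am ~ wt`, and the value of `new_wt` are assumptions chosen only for illustration.

```r
fit <- glm(am ~ wt, data = mtcars, family = binomial)  # mtcars is a stand-in data set
b <- coef(fit)

new_wt <- 3                                       # hypothetical predictor value
log_odds <- b["(Intercept)"] + b["wt"] * new_wt   # linear on the log-odds scale
p_hand <- 1 / (1 + exp(-log_odds))                # logistic function
p_pred <- predict(fit, newdata = data.frame(wt = new_wt), type = "response")

pred_class <- ifelse(p_pred > 0.5, 1, 0)          # classify with a 0.5 threshold
```

Without `type = "response"`, `predict()` returns the log-odds rather than the probability.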

Linear Discriminant Analysis (LDA):

- Model: Assumes that the features are normally distributed and have a common covariance matrix for all classes. LDA seeks a linear combination of features that best separates multiple classes.

- Objective: Maximizes the between-class variance relative to the within-class variance.

- Use Cases: Effective when the classes have similar covariance matrices and features are normally distributed.

Quadratic Discriminant Analysis (QDA):

- Model: Relaxation of LDA, allowing each class to have its own covariance matrix. QDA models a quadratic decision boundary.

- Objective: Maximizes the likelihood of the observed data under the assumption of a quadratic decision boundary.

- Use Cases: Useful when the assumption of a common covariance matrix is not appropriate, and the decision boundaries between classes are quadratic.

Be sure to know:

- the assumptions of LDA vs. QDA (and when to use which)

- the derivation of the linear decision boundary
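As a reminder of how the linear boundary arises, here is a sketch in standard notation (\(\pi_k\) for the prior probability of class \(k\), \(\mu_k\) for its mean, and \(\Sigma\) for the common covariance matrix; these symbols are assumed here and may differ from the lecture slides). Taking the log of \(\pi_k\) times the class-\(k\) normal density and dropping terms that do not depend on \(k\) gives the discriminant function

\[ \delta_k(x) = x^T \Sigma^{-1} \mu_k - \frac{1}{2} \mu_k^T \Sigma^{-1} \mu_k + \log \pi_k \]

The boundary between classes \(k\) and \(l\) is the set of \(x\) with \(\delta_k(x) = \delta_l(x)\), that is

\[ x^T \Sigma^{-1} (\mu_k - \mu_l) = \frac{1}{2} \left( \mu_k^T \Sigma^{-1} \mu_k - \mu_l^T \Sigma^{-1} \mu_l \right) - \log \frac{\pi_k}{\pi_l} \]

This is linear in \(x\) because the quadratic term \(x^T \Sigma^{-1} x\) is shared by all classes and cancels. In QDA each class has its own \(\Sigma_k\), the quadratic term no longer cancels, and the boundary is quadratic.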

Assessing classification models

A confusion matrix provides a table that summarizes the counts of true positive (TP), true negative (TN), false positive (FP), and false negative (FN) predictions. Metrics Derived from the Confusion Matrix include:

Accuracy: \(\frac{\text{True Positives} + \text{True Negatives}}{\text{Total Population}}\)

Precision (Positive Predictive Value): \(\frac{\text{True Positives}}{\text{True Positives} + \text{False Positives}}\)

Recall (Sensitivity, True Positive Rate): \(\frac{\text{True Positives}}{\text{True Positives} + \text{False Negatives}}\)

F1 Score: \(2 \times \frac{\text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}\)
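A worked example of these formulas, using hypothetical counts chosen only to keep the arithmetic simple:

```r
# Hypothetical confusion-matrix counts
TP <- 40; FP <- 10
FN <- 20; TN <- 30

accuracy  <- (TP + TN) / (TP + TN + FP + FN)                # 70 / 100 = 0.7
precision <- TP / (TP + FP)                                 # 40 / 50  = 0.8
recall    <- TP / (TP + FN)                                 # 40 / 60  = 0.667
f1        <- 2 * precision * recall / (precision + recall)  # 80 / 110 = 0.727
```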

The Receiver Operating Characteristic (ROC) Curve plots the true positive rate (sensitivity) against the false positive rate for different classification thresholds.

- “Better” ROC curves should hug the top-left corner of the plot, indicating high sensitivity (true positive rate) and low false positive rate. This implies that the model achieves high true positive rates while minimizing false positive rates.

Area Under the Curve (AUC) summarizes the overall performance of the model across all thresholds, with a higher AUC indicating better performance.

An AUC of 0.5 represents random guessing, while an AUC of 1.0 signifies perfect discrimination.
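To make the ROC curve and AUC concrete, the sketch below computes both by hand in base R. The scores and labels are made up, and the AUC uses its interpretation as the probability that a randomly chosen positive receives a higher score than a randomly chosen negative.

```r
# Hypothetical predicted probabilities and true labels (1 = positive)
score <- c(0.9, 0.8, 0.7, 0.6, 0.55, 0.4, 0.3, 0.2)
label <- c(1,   1,   0,   1,   0,    1,   0,   0)

# One (FPR, TPR) point per classification threshold
thresholds <- sort(unique(score), decreasing = TRUE)
tpr <- sapply(thresholds, function(t) mean(score[label == 1] >= t))
fpr <- sapply(thresholds, function(t) mean(score[label == 0] >= t))

# AUC: share of (positive, negative) pairs ranked correctly, ties counted as half
pos <- score[label == 1]
neg <- score[label == 0]
auc <- mean(outer(pos, neg, ">") + 0.5 * outer(pos, neg, "=="))   # 13 / 16 = 0.8125
```

Plotting `tpr` against `fpr` traces the ROC curve; lowering the threshold moves along it from the bottom-left towards the top-right.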

Regression/Classification models

Tree-based methods

Tree-based methods are used in both regression and classification tasks, making them versatile and widely applicable in machine learning.

Decision Trees:

Model: Builds a tree structure to make predictions based on recursive binary splits of the input features. Each internal node represents a decision, and each leaf node represents the prediction (e.g. value or class label).

Objective (for classification): The goal of a decision tree for classification is to partition the input space into distinct regions, each corresponding to a specific class or category. At each split, the tree maximizes information gain, or equivalently minimizes an impurity measure such as the Gini index or entropy.

Objective (for regression): Minimize the RSS (via recursive binary splitting) at each node.

Use Cases: Versatile for both binary and multiclass classification. Interpretability is a notable advantage. Suitable for problems with complex, non-linear relationships. Prone to overfitting.

Pruning: technique used to prevent overfitting by removing or merging nodes.
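The impurity measures behind the classification objective can be computed directly. In this sketch the class proportions describe a hypothetical node and a hypothetical candidate split; the helper names `gini` and `entropy` are defined here and do not come from the tree package.

```r
gini    <- function(p) sum(p * (1 - p))                     # Gini index for class proportions p
entropy <- function(p) -sum(ifelse(p > 0, p * log2(p), 0))  # entropy in bits

# Hypothetical parent node: 10 observations of class A and 10 of class B
parent <- c(0.5, 0.5)
# Candidate split: left child has 8 A / 2 B, right child has 2 A / 8 B
left  <- c(0.8, 0.2)
right <- c(0.2, 0.8)

# Weighted impurity after the split (each child holds half of the observations)
gini_after <- 0.5 * gini(left) + 0.5 * gini(right)
gini_drop  <- gini(parent) - gini_after   # 0.5 - 0.32 = 0.18
```

Among candidate splits, the tree picks the one with the largest drop in impurity.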

R functions:

tree, cv.tree, prune.tree from the tree package

Know how to read the trees and make predictions from them

See Midterm 2 Review for more

Next we talked about three extensions to these simple trees (which typically do not perform well)…

Bagging (Bootstrap Aggregating):

Objective: Reduce variance and overfitting by training multiple instances of the same learning algorithm on different bootstrap samples (random subsets with replacement) of the training data.

Steps:

- Create multiple subsets of the training data by sampling with replacement.

- Train a base model (e.g., decision tree) independently on each subset.

- Combine the predictions through averaging (regression) or voting (classification).

Benefits:

Increased robustness and stability over a single decision tree.

Improved generalization by reducing variance.

Feature importance analysis.

OOB estimates of error

R functions:

randomForest(..., mtry = p) from the randomForest package (where p is the number of predictors used in your model).

how to read variable importance plots

obtaining OOB errors

Random Forests

Objective: Extend bagging by introducing additional randomness through random feature selection at each split during the construction of decision trees.

Steps:

- For each split in a tree, consider only a random subset of features.

- Train multiple trees independently using different bootstrap samples and feature subsets.

- Combine predictions through averaging (regression) or voting (classification).

Benefits:

- Further reduction in variance compared to bagging, because considering a random subset of features at each split decorrelates the trees.

- Improved generalization and accuracy.

- Feature importance analysis

- OOB estimates of error

R functions:

randomForest(..., mtry) from the randomForest package

how to read variable importance plots

obtaining OOB errors

Boosting:

Objective: Build a strong predictive model by sequentially training weak learners (typically shallow trees), with each new learner focusing on correcting the errors of the ensemble so far.

Steps:

- Train a base model on the entire dataset.

- Assign higher weights to misclassified instances.

- Train the next model with adjusted weights, emphasizing the previously misclassified instances.

- Iterate until a predefined number of models or a specified performance is reached.

- Combine predictions through weighted sum.

Benefits:

Improved accuracy over time.

Adaptability to complex patterns and hard-to-classify instances.

Effective handling of imbalanced data.

Disadvantages:

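- no OOB error estimates of the kind bagging and random forests provide (trees are built sequentially, not on independent bootstrap samples); variable importance is less direct, although summary() on a gbm fit does report relative influence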

R functions:

gbm(formula, distribution = "bernoulli", n.trees, interaction.depth = \(d\), shrinkage = \(\lambda\)) from the gbm package

General expectation of performance:

\[\text{Single Tree} < \text{Bagging} < \text{RF} < \text{Boosting}\]

K-Nearest Neighbors (KNN):

- Model: Predicts the output/class based on the average/majority vote of the k-nearest neighbors in the feature space.

- Objective: Captures local patterns in the data.

- Use Cases: Suitable for problems where local relationships are important and the data has a clear structure. Simple and intuitive, suitable for both binary and multiclass classification.
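A minimal base R sketch of KNN regression. The function name `knn_regress`, its arguments, and the use of `mtcars` are assumptions for illustration and not a function from any package.

```r
knn_regress <- function(train_x, train_y, new_x, k = 3) {
  # train_x: numeric matrix (rows = observations); new_x: numeric vector of the same width
  d <- sqrt(rowSums(sweep(train_x, 2, new_x)^2))  # Euclidean distance to every training point
  nearest <- order(d)[1:k]                        # indices of the k closest points
  mean(train_y[nearest])                          # average response of the neighbours
}

# Scale predictors first so that no single variable dominates the distance
X <- scale(as.matrix(mtcars[, c("wt", "hp")]))    # mtcars is a stand-in data set
y <- mtcars$mpg

pred_k1 <- knn_regress(X, y, new_x = X[1, ], k = 1)   # with k = 1 a training point predicts itself
pred_k5 <- knn_regress(X, y, new_x = X[1, ], k = 5)   # larger k averages over more neighbours
```

For classification, the final `mean()` would be replaced by a majority vote over the neighbours' labels. Small `k` gives a flexible, high-variance fit; large `k` gives a smoother, higher-bias fit.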

Neural Networks

Basic Architecture:

Neural networks are computational models inspired by the structure and function of biological neural networks.

They consist of interconnected nodes (neurons) organized into layers: an input layer, one or more hidden layers, and an output layer.

Activation Function:

- Each node in a neural network applies an activation function to its input. Common activation functions include sigmoid, hyperbolic tangent (tanh), and rectified linear unit (ReLU).

Weights and Biases:

The connections between nodes are characterized by weights, which are adjusted during training to capture the relationships in the data.

Biases are additional parameters that allow the model to account for shifts in the data.

Feedforward and Backpropagation:

During the training process, input data are fed forward through the network, and predictions are compared to the actual outcomes.

Backpropagation involves adjusting weights and biases based on the error to minimize the difference between predictions and actual outcomes.

Loss Function:

- A loss function quantifies the difference between predicted and actual values. During training, the goal is to minimize this loss.
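The pieces above (weights, biases, activation functions, feedforward pass, loss) fit together as in the following sketch. The network size, the random weights, and the input values are all hypothetical, and no training (backpropagation) is performed.

```r
sigmoid <- function(z) 1 / (1 + exp(-z))

# Hypothetical tiny network: 2 inputs -> 3 hidden units (ReLU) -> 1 output (sigmoid)
set.seed(3)
W1 <- matrix(rnorm(3 * 2), nrow = 3)  # hidden-layer weights
b1 <- rnorm(3)                        # hidden-layer biases
W2 <- matrix(rnorm(3), nrow = 1)      # output-layer weights
b2 <- rnorm(1)                        # output-layer bias

forward <- function(x) {
  h <- pmax(W1 %*% x + b1, 0)         # hidden activations: ReLU(W1 x + b1)
  as.numeric(sigmoid(W2 %*% h + b2))  # output: predicted probability
}

x <- c(0.5, -1.2)   # one input observation
y <- 1              # its true label
p_hat <- forward(x)
loss <- -(y * log(p_hat) + (1 - y) * log(1 - p_hat))  # binary cross-entropy loss
```

Training would repeat this forward pass over the data and use backpropagation to adjust `W1`, `b1`, `W2`, and `b2` in the direction that lowers the loss.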

Extension to the single-layer neural network we looked at in class:

Deep learning extends neural networks to include multiple hidden layers, leading to the term “deep neural networks.”

Convolutional Neural Networks (CNNs): Specialized architectures for image-related tasks. CNNs use convolutional layers to automatically learn spatial hierarchies of features.

Recurrent Neural Networks (RNNs): Suited for sequential data. RNNs maintain a hidden state that captures temporal dependencies in sequences.

Unsupervised Learning

Clustering

Clustering methods in machine learning are techniques that group similar data points into distinct clusters or groups based on certain criteria. These methods aim to discover hidden patterns or structures in the data without prior knowledge of the class labels. Here are the clustering methods we talked about:

K-Means Clustering:

Method: Divides the dataset into k clusters by assigning data points to the cluster whose centroid (mean) is closest to them.

Objective: Minimizes the within-cluster sum of squares.

Use Cases: Effective for spherical clusters with similar sizes.

See Midterm 2 Review
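A brief sketch of k-means with the built-in `kmeans()` function, using `iris` as a stand-in data set and \(k = 3\) as an arbitrary choice for illustration. The last line recomputes the objective by hand to show what is being minimized.

```r
X <- scale(iris[, 1:4])   # iris is a stand-in; scale because k-means is distance-based
set.seed(4)
km <- kmeans(X, centers = 3, nstart = 20)   # nstart: try several random starts, keep the best

km$tot.withinss     # the objective: total within-cluster sum of squares
table(km$cluster)   # cluster sizes

# Recompute the objective from the assignments and centroids
wss_by_hand <- sum((X - km$centers[km$cluster, ])^2)
```

Because the result depends on the random starting centroids, using `nstart` greater than 1 and setting a seed makes the output more stable and reproducible.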

Agglomerative Hierarchical Clustering:

Method: Builds a hierarchy of clusters by starting with each data point as its own cluster and repeatedly merging the two closest clusters.

Objective: Creates a dendrogram to represent the hierarchy.

Use Cases: Useful when there is a hierarchical structure in the data.

See Midterm 2 Review

Gaussian Mixture Models (GMM):

- Method: Models data as a mixture of several Gaussian distributions, allowing for flexibility in cluster shapes.

- Objective: Maximizes the likelihood of the observed data under the assumption of a Gaussian mixture.

- Use Cases: Applicable when clusters have different shapes and are not necessarily spherical.

Practice questions to come!

Distance Measures:

K-means and hierarchical clustering, as we used them, rely on Euclidean distances. We also looked at other distance measures, including: Manhattan, Mahalanobis, Matching Binary, Asymmetric Binary, and Gower’s Distance.

- know the basic differences between these, and when you can use them (e.g. which can be used with categorical predictors).
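Some of these distances are available in base R. The sketch below uses five rows of `mtcars` as a stand-in and feeds the Euclidean distances into agglomerative clustering; the choice of variables, complete linkage, and two clusters are assumptions for illustration.

```r
X <- scale(mtcars[1:5, c("mpg", "wt", "hp")])   # five cars from a stand-in data set

d_euc <- dist(X, method = "euclidean")   # straight-line distance
d_man <- dist(X, method = "manhattan")   # sum of absolute coordinate differences

# Mahalanobis distance of each row from the column means, accounting for correlation
d_mah <- sqrt(mahalanobis(X, center = colMeans(X), cov = cov(X)))

# Agglomerative clustering on the Euclidean distances, cut into 2 clusters
hc <- hclust(d_euc, method = "complete")
groups <- cutree(hc, k = 2)
```

Euclidean, Manhattan, and Mahalanobis distances need numeric variables. For categorical or mixed data, Gower's distance is available through `daisy(..., metric = "gower")` in the cluster package.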

Dimensionality Reduction

Reduces the number of features or variables in the dataset while preserving relevant information. This is particularly useful for visualizing high-dimensional data and improving computational efficiency. The method we looked at for achieving this was PCA (there are others)

Principal Component Analysis (PCA)

Principal Component Analysis (PCA) is a dimensionality reduction technique widely used in machine learning and data analysis. It aims to transform a dataset with potentially correlated features into a new set of uncorrelated features, called principal components, that capture the maximum variance in the data. PCA is particularly useful for visualizing high-dimensional data, reducing computational complexity, and identifying important patterns.

Steps in PCA:

Standardize the features to have zero mean and unit variance. This ensures that all features contribute equally to the covariance matrix.

Compute Covariance Matrix from the standardized data. The covariance matrix represents the relationships between different features, indicating how they vary together.

Perform Eigenvalue Decomposition on the covariance matrix to obtain eigenvectors and eigenvalues.

Eigenvectors: Represent the directions of maximum variance in the data.

Eigenvalues: Indicate the magnitude of variance along the corresponding eigenvector.

Interpret the Principal Components:

Principal components are the eigenvectors obtained from the eigenvalue decomposition. They form a new set of orthogonal axes that capture the most significant variability in the data.

The first principal component corresponds to the direction of maximum variance, the second to the second-highest variance, and so on, …

Select Principal Components: Choose a subset of the eigenvectors (principal components) based on the desired amount of retained variance, or the Kaiser criterion, or the elbow method.

Transform Data: Project the original data onto the selected principal components to obtain the reduced-dimensional representation. If the number of components \(M\) is less than the original number of predictors in the data \(p\), this results in a transformed dataset with reduced dimensionality (otherwise it represents a rotation of the data).
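The steps above can be traced in base R and compared with the built-in `prcomp()`. The `USArrests` data and the choice \(M = 2\) are assumptions made for illustration.

```r
X <- scale(USArrests)                 # USArrests is a stand-in; standardize first

eig <- eigen(cov(X))                  # covariance matrix and its eigen decomposition
pve <- eig$values / sum(eig$values)   # proportion of variance explained by each PC
cumsum(pve)                           # cumulative proportion, used to choose M

kaiser_keep <- sum(eig$values > 1)    # Kaiser criterion: keep PCs with eigenvalue > 1

M <- 2
scores <- X %*% eig$vectors[, 1:M]    # project the data onto the first M components

# The same analysis with the built-in function
pc <- prcomp(USArrests, scale. = TRUE)
```

`pc$sdev^2` matches `eig$values`, and `pc$x` matches `scores` up to the sign of each column, since the direction of an eigenvector is only defined up to sign.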

Use Cases:

Dimensionality Reduction:

- PCA is often used to reduce the number of features in a dataset while retaining most of the variability.

Visualization:

- Visualizing high-dimensional data in a lower-dimensional space is a common application of PCA, especially in scatter plots.

Noise Reduction:

- By focusing on the principal components with the highest variance, PCA can help reduce the impact of noise in the data.

Feature Engineering:

- PCA-derived features can be used as input for downstream machine learning models.

Identifying Important Features:

- The contribution of each original feature to principal components provides insights into the importance of different features.

Know how to:

pick an appropriate number of PCs (e.g. proportion of variance explained, reading an elbow plot, the Kaiser criterion)

Assess what a principal component represents

interpret a bi-plot