Data 311: Machine Learning

Lecture 5: Regression Models Part 2

University of British Columbia Okanagan

Outline

We are in the supervised machine learning setting, we will investigate:

- Interaction (linear regression model)

- Categorical Predictors (linear regression model)

- Interaction with Mixed Predictors (linear regression model)

- Polynomial Regression

- KNN Regression (non-parametric approach)

READ ME

Include something about the AIC for model selection?

Example: Clock Auction

The data give the selling price,

Priceat auction of 32 antique grandfather clocks.Also recorded is the age of the clock (

Age) and the number of people who made a bid (Bidders).This data can be downloadable here

Note: this data is tab delimited; values within the dataset are separated by tab characters. One way to read this into R is to use read.delim().

Clock Auction Data

3D Scatter plot

Correlation

correlation matrix (see ?cor)

Age Bidders Price

Age 1.0000000 -0.2537491 0.7302332

Bidders -0.2537491 1.0000000 0.3946404

Price 0.7302332 0.3946404 1.0000000It is reassuring that Price seems to be linearly related to the Age and Bidders. Notice that Age and Bidders have a slight negative correlation.

Pairwise scatterplot

Another way that you could investigate correlation is through a pairwise scatterplot, obtained using plot()

SLR with Age

...

Call:

lm(formula = Price ~ Age)

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) -191.66 263.89 -0.726 0.473

Age 10.48 1.79 5.854 2.1e-06 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 273 on 30 degrees of freedom

Multiple R-squared: 0.5332, Adjusted R-squared: 0.5177

F-statistic: 34.27 on 1 and 30 DF, p-value: 2.096e-06

...SLR with Bidders

...

Call:

lm(formula = Price ~ Bidders)

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 806.40 230.68 3.496 0.00149 **

Bidders 54.64 23.23 2.352 0.02540 *

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 367.2 on 30 degrees of freedom

Multiple R-squared: 0.1557, Adjusted R-squared: 0.1276

F-statistic: 5.534 on 1 and 30 DF, p-value: 0.0254

...iClicker

iClicker: SLR comparison

Which model explains a higher percentage of the variance in Price?

SLR with Age

SLR with Bidders

Both models explain the same percentage

None of the models explain any variance

Correct answer: A. This model has a righer \(R^2\) and smaller RSE

MLR without Interaction

...

Call:

lm(formula = Price ~ Age + Bidders)

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) -1336.7221 173.3561 -7.711 1.67e-08 ***

Age 12.7362 0.9024 14.114 1.60e-14 ***

Bidders 85.8151 8.7058 9.857 9.14e-11 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 133.1 on 29 degrees of freedom

Multiple R-squared: 0.8927, Adjusted R-squared: 0.8853

F-statistic: 120.7 on 2 and 29 DF, p-value: 8.769e-15

...iClicker

Fitted MLR model

What is the fitted model?

Price=Age\(\times\) 12.74 +Bidders\(\times\) 85.82Price= -1336.72 +Age\(\times\) 12.74 +Bidders\(\times\) 85.82Price= -1336.72 + 12.74 + 85.82Price= -1336.72 \(\times \beta_0\) +Age\(\times\) 12.74 +Bidders\(\times\) 85.82

Correct answer: B

Interpretation of Coefficients

- \(\beta_1\): For a clock with a given amount of Bidders, an increase of 1 year in the age of the clock is associated with a $12.74 increase in the mean price of the clock.

- \(\beta_2\) : For a clock with given age, an increase of 1 Bidder is associated with a $85.82 increase in the mean selling price.

Important

Notice that the specific value of age (resp. bidders) does not affect the interpretation of \(\beta_1\) (resp. \(\beta_2\))

Setting Bidders values

Fitted model:

Price = -1336.72 + Age \(\times\) 12.74 + Bidders \(\times\) 85.82

Example 1: when we have 6 bidders Price is calculated:

= -1336.72 + Age \(\times\) 12.74 + 6 \(\times\) 85.82

= -821.8 + Age \(\times\) 12.74

Example 2: when we have 12 bidders Price is calculated:

= -1336.72 + Age \(\times\) 12.74 + 12 \(\times\) 85.82

= -306.88 + Age \(\times\) 12.74

Notice how both lines have the same slope

MLR Visualization (no interaction)

with 2 qualitative predictors

Show the code

library(RColorBrewer)

cf.mod <- coef(ablm)

par(mar = sm.mar)

plot(Price~Age, ylim = c(100, 3000))

ivec <- seq(from = min(Bidders), to = max(Bidders))

icol <- brewer.pal(n = length(ivec), name = "Spectral")

for (i in 1:length(ivec)){

abline(a = cf.mod[1] + cf.mod["Bidders"]*ivec[i], b = cf.mod["Age"], col = icol[i])

}

points(Age, Price)

legend("topleft", legend = ivec, col = icol, ncol = 3, lty = 1, title = "Number of Bidders")

Show the code

A 3D scatterplot of the clock data with the fitted additive MLR model (no interaction) depicted as a plane. This plane is the surface that minimizes the sum of squared residuals

Interaction

Motivation

Suppose that the affect of Age on Price actually depends on how many Bidders there are.

Similarly, the affect of Bidders on Price might depend on the Age of the clock.

We can allow the coefficient of a predictor to vary based on the value(s) of the other predictor(s) by including an additional interaction term1

Interaction Model

With 2 predictors

\[\begin{equation} Y = \beta_0 + \beta_1 X_{1} + \beta_2 X_{2} + \beta_3 X_{1} X_{2} + \epsilon \end{equation}\]

- \(Y\) is the response variable

- \(X_{j}\) is the \(j^{th}\) predictor variable

- \(\beta_0\) is the intercept (unknown)

- \(\beta_j\) are the regression coefficients (unknown)

- \(\epsilon\) is the error, assumed \(\epsilon \sim N(0, \sigma^2)\).

MLR with Interaction

\[\begin{align} Y &= \beta_0 + \beta_1 X_1+ \beta_2 X_2+ \beta_3 X_1 X_2 \end{align}\]

\[\begin{align} \phantom{Y} &= \beta_0 + \beta_2 X_2 + (\beta_1 + \beta_3 X_2) X_1 \end{align}\]

\[\begin{align} \phantom{Y} &= \tilde{\beta_0} + \tilde{\beta_1} X_1 \phantom{+ (\beta_1 + \beta_3 X_2) X_1 } \end{align}\]

where \(\tilde\beta_1 = (\beta_1 + \beta_3 X_2)\) and \(\tilde\beta_0 = (\beta_0 + \beta_2 X_2)\). We can view this as a “new” coefficient for \(X_1\), denoted \(\tilde{\beta_1}\), which is a function of \(X_2\) so that the association between \(X_1\) and \(Y\) is no longer constant, but depends on the value \(x_2\)

Interaction in R

...

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 322.7544 293.3251 1.100 0.28056

Age 0.8733 2.0197 0.432 0.66877

Bidders -93.4099 29.7077 -3.144 0.00392 **

Age:Bidders 1.2979 0.2110 6.150 1.22e-06 ***

Residual standard error: 88.37 on 28 degrees of freedom

Multiple R-squared: 0.9544, Adjusted R-squared: 0.9495

F-statistic: 195.2 on 3 and 28 DF, p-value: < 2.2e-16

...Hierarchical Pricinciple

You will notice that the so-called main effect of the Age predictor does not have a significant \(p\)-value; resist the temptation to remove it from the model!

Hierarchical principal

If the interaction effect is significant you should also keep the main effects even if they have non-significant p-values

Interpretation of Coefficients

Theoretical Model

Price = \(\beta_0\) + \(\beta_1\cdot\)(Age) + \(\beta_2\cdot\)(Bidders) + \(\beta_3\cdot\)(Age \(\cdot\) Bidders)

Fitted Model

Price = \(\hat\beta_0\) + \(\hat\beta_1\cdot\)(Age) + \(\hat\beta_2\cdot\)(Bidders) + \(\hat\beta_3\cdot\)(Age \(\cdot\) Bidders)

\(\phantom{\texttt{Price}}\) = 322.8 + 0.9 (Age) -93.4 (Bidders) + 1.3(Age \(\cdot\) Bidders)

- \(\beta_1\) is the effect of Age when Bidders = 0.

- Since a clock needs to have at least 1 bidder to be sold \(\beta_1\) is meaningless by itself.

So how can we communicate the affect of Age on Price?

Fitted Model with 6 bidders

\[\begin{align} &\texttt{Price} = \hat\beta_0 + \hat\beta_1\texttt{Age} + \hat\beta_2\cdot\texttt{Bidders} + \hat\beta_3\cdot\texttt{Age} \cdot \texttt{Bidders}\\ &= 322.75 + 0.87\cdot\texttt{Age} -93.41\cdot\texttt{Bidders} + 1.3\cdot\texttt{Age} \cdot \texttt{Bidders}\\ &= 322.75 + ( 0.87 + 1.3\cdot \texttt{Bidders})\texttt{Age} -93.41\cdot\texttt{Bidders} \end{align}\]

Notice now the multiplier associated with Age now depends on the value of Bidders.

For example, if Bidders = 6, Price is given:

\[\begin{align} &= 322.75 + (0.87 + 1.3\cdot 6)\texttt{Age} -93.41 (6) \\ &= 322.75 + (8.66)\texttt{Age} -560.46 \\ &= -237.71 + (8.66)\texttt{Age} \end{align}\]

Fitted Model with 12 bidders

\[\begin{align} &\texttt{Price} = \hat\beta_0 + \hat\beta_1\texttt{Age} + \hat\beta_2\cdot\texttt{Bidders} + \hat\beta_3\cdot\texttt{Age} \cdot \texttt{Bidders}\\ &= 322.75 + 0.87\cdot\texttt{Age} -93.41\cdot\texttt{Bidders} + 1.3\cdot\texttt{Age} \cdot \texttt{Bidders}\\ &= 322.75 + ( 0.87 + 1.3\cdot \texttt{Bidders})\texttt{Age} -93.41\cdot\texttt{Bidders} \end{align}\]

Notice now the multiplier associated with Age now depends on the value of Bidders.

Eg. Bidders = 12, Price is given:

\[\begin{align} &= 322.75 + (0.87 + 1.3\cdot 12)\texttt{Age} -93.41 (12) \\ &= 322.75 + (16.45)\texttt{Age} -1120.92 \\ &= -798.16 + (16.45)\texttt{Age} \end{align}\]

Predicted price of 150-year-old clocks

\[\begin{align} &\texttt{Price} = \hat\beta_0 + \hat\beta_1\texttt{Age} + \hat\beta_2\cdot\texttt{Bidders} + \hat\beta_3\cdot\texttt{Age} \cdot \texttt{Bidders}\\ &= 322.75 + 0.87\cdot\texttt{Age} -93.41\cdot\texttt{Bidders} + 1.3\cdot\texttt{Age} \cdot \texttt{Bidders}\\ &= 322.75 + ( 1.3\texttt{Age} -93.41 ) \texttt{Bidders} + 0.87 \cdot \texttt{Age} \end{align}\]

Notice now the multiplier associated with Bidders now depends on the value of Age.

Eg. Age = 150, Price is given:

\[\begin{align*} &= 322.75 + ( 1.3\cdot 150 -93.41 ) \texttt{Bidders} + 0.87 \cdot 150 \\ &= 322.75 + ( 101.27 ) \cdot \texttt{Bidders} + 130.99 \\ &= 453.75 + ( 101.27 ) \cdot \texttt{Bidders} \end{align*}\]

Predicting using R

1

671.666 1

928.8824 1

1162.671 Important

newdata must be a data frame with columns that match the names of your predictors used in the lm() fit. If omitted, the fitted values in your training set will be used. For more information see ?predict.lm

iClicker negative price

What is the main reason these predictions might not be reliable?

1

-32.35781 1

6488.723 - The assumptions are not being met

- Age and Bidders should be the same number

- We are extrapolating outside our observed data range

- The model is underfitting

Hint: it might be useful to revisit the Pairwise scatterplot

Correct answer: C

Show the code

cf.mod <- coef(ilm)

x1.seq <- seq(min(Age),max(Age),length.out=25)

x2.seq <- seq(min(Bidders),max(Bidders),length.out=25)

z <- t(outer(x1.seq, x2.seq, function(x,y) cf.mod[1]+cf.mod[2]*x+cf.mod[3]*y+ cf.mod[4]*x*y))

fig %>% add_surface(x = ~x1.seq, y = ~x2.seq, z = ~z, showscale = FALSE ,

surfacecol = "gray", opacity = 0.5)A 3D scatterplot of the clock data with the fitted MLR model with interaction depicted by a (non-flat) surface.

Interaction Model with set Bidders

Show the code

library(RColorBrewer)

par(mar = sm.mar)

plot(Price~Age, ylim = c(100, 3000))

ivec <- seq(from = min(Bidders), to = max(Bidders))

icol <- brewer.pal(n = length(ivec), name = "Spectral")

for (i in 1:length(ivec)){

abline(a = cf.mod[1] + cf.mod[3]*ivec[i],

b = cf.mod[2] + cf.mod[4]*ivec[i],

col = icol[i])

}

points(Age, Price)

legend("topleft", legend = ivec, col = icol, ncol = 3, lty = 1, title = "Number of Bidders")

Categorical Predictors

So far we’ve assumed that our predictors are continuous valued when we’ve fit a regression.

But there is no real problem if instead we have categorical (i.e. qualitative) values

Let’s motivate this through an example.

Example body

This data set contains the following on 507 individuals:

21 body dimension measurements (eg. wrist and ankle girth)

Age,Weight(in kg),Height(in cm), andGender1 .

You can find this in the gclus library as body.

body data set

Let’s regress Weight on some of these predictors.

Scatterplot Weight vs Height

Incorporating Gender

But we know more, eg. gender.

Question: how do we incorporate categorical variables into this model?

MLR with categorical variables

We need to create dummy variables.

A dummy, or indicator variable takes only the value 0 or 1 to indicate the absence or presence of some categorical effect that may be expected to shift the outcome.

This requires making one of the possible responses of the categorical variable the reference (which means it is assumed true in the base model), and then creating stand-in (dummy) variables for the non-reference options.

It’s best understood through examples…

body MLR Model

The MLR model with 2 predictors will not look any different:

\begin{equation}Y = \beta_0 + \beta_1 X_1 + \beta_2 X_2

\end{equation}

But now, we will consider having mixed data types:

-

Height(numeric) -

Gender(qualitative/categorical)

If a qualitative predictor (also known as a factor) only has two possible values (AKA levels), then incorporating it into a regression model is very simple ….

R syntax (for mixed predictor types)

factors

Using factor() to convert categorical variables to factor (aka a ‘category’ type in R) is extremely important. Failure to do this will result in R treating Gender as a continuous number rather than a category.

Dummy Variables

Under the hood, lm() creates dummy variable for categorical predictors. For a categorical variable with \(k\) levels (aka categories), \(k-1\) dummy variables are created, with the first level being our reference level.

\[ \texttt{Weight} = \beta_0 + \beta_1 \texttt{Height} + \beta_2 \texttt{Male} \]

We now have a dummy variable Male (1 = yes, 0 = no).

If the male, then Male = 1 and our model becomes:

\[ \begin{align} \texttt{Weight} &= \beta_0 + \beta_1 \texttt{Height}+ \beta_2 (1)\\ &= (\beta_0 +\beta_2) + \beta_1 \texttt{Height} \end{align} \]

If female, then Male = 0 and our model becomes:

\[ \begin{align} \texttt{Weight} &= \beta_0 + \beta_1 \texttt{Height}+ \beta_2 (0) \\ & = \beta_0 + \beta_1 \texttt{Height} \end{align} \]

Parallel lines models

\[ \texttt{Weight} = \begin{cases} \beta_0 + \beta_2 + \beta_1 \texttt{Height}, & \text{for males}\\ \ \quad \quad \beta_0 + \beta_1 \texttt{Height}, & \text{for females} \end{cases} \]

This is sometimes referred to as the parallel lines (or parallel slopes) model.

An parallel slopes models include one numeric and one categorical explanatory variable

This model allows for different intercepts but forces a common slope.

Plot of Parallel Slope Model

Show the code

par(mar = c(4, 3.9, 0, 1)) # reduce even more

plot(Weight~Height, col=Gender+1) # 1 = black, 2 = red (3 = green, 4 = blue ...)

legend("topleft", col=c(2,1), pch=1, legend = c("Male", "Female"))

mcoefs <- mlr$coefficients

abline(mcoefs[1]+ mcoefs[3], mcoefs[2], col=2, lwd=2)

abline(mcoefs[1], mcoefs[2], lwd=2)

Parallel lines fit

...

lm(formula = Weight ~ Height + factor(Gender), data = body)

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) -56.94949 9.42444 -6.043 2.95e-09 ***

Height 0.71298 0.05707 12.494 < 2e-16 ***

factor(Gender)1 8.36599 1.07296 7.797 3.66e-14 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 8.802 on 504 degrees of freedom

Multiple R-squared: 0.5668, Adjusted R-squared: 0.5651

F-statistic: 329.7 on 2 and 504 DF, p-value: < 2.2e-16

...Interpreting the output

This small p-value associated with the “male” dummy variable indicates that, after controlling for height, there is strong statistical evidence to suggest a difference in average weight based on gender.

It is estimated that males will tend to be 8.37 kg heavier than females of the same height.

N.B. The level selected as the baseline category is arbitrary, and the final predictions for each group will be the same regardless of this choice

Aside: factor levels

We can re-level and name our Gender to get…

body2 <- body

body2$Gender = factor(body$Gender, levels=c(1,0), labels = c("Male", "Female"))

summary(lm(Weight ~ Height + Gender, data=body2))...

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) -48.58350 10.15868 -4.782 2.28e-06 ***

Height 0.71298 0.05707 12.494 < 2e-16 ***

GenderFemale -8.36599 1.07296 -7.797 3.66e-14 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 8.802 on 504 degrees of freedom

Multiple R-squared: 0.5668, Adjusted R-squared: 0.5651

F-statistic: 329.7 on 2 and 504 DF, p-value: < 2.2e-16

...Overkill?

- This may see like overkill seeing as how

Genderwas already coded up as 0 for female and 1 for male. - However, imagine if

Genderinstead was grouped as: male, female or other. - In this case, we would need pick a reference level and create two 1 dummy variables to represent other levels for this categorical variable.

Gender with >2 Levels

Choosing Female1 as our reference level requires us to make a dummy variable for Male and a dummy variable for Other.

\[ \texttt{Weight} = \beta_0 + \beta_1 \texttt{Height} + \beta_2 \texttt{Male} + \beta_3 \texttt{Other} \]

We now have two dummy variables

\[ \texttt{Male} = \begin{cases} 1& \text{if male}\\ 0& \text{otherwise} \end{cases} \]

\[ \texttt{Other} = \begin{cases} 1& \text{if Other}\\ 0& \text{otherwise} \end{cases} \]

When the individual identifies as a male we have Male = 1, Other = 0 and so our model becomes

\[ \texttt{Weight} = \beta_0 + \beta_2 + \beta_1 \texttt{Height} \]

When the individual identifies as a female (reference level) we have Male = 0, Other = 0 and so our model becomes

\[ \texttt{Weight} = \beta_0 + \beta_1 \texttt{Height} \]

When the individual identifies as Other we have Male = 0, Other = 1 and so our model becomes

\[ \texttt{Weight} = \beta_0 + \beta_3 + \beta_1 \texttt{Height} \]

Parallel Lines with >2 Levels

As before, we have parallel lines1 (one line for each level in our Gender factor)

\[ \texttt{Weight} = \begin{cases} \beta_0 + \beta_2 + \beta_1 \texttt{Height} , & \text{for males}\\ \beta_0 + \beta_3 + \beta_1 \texttt{Height} , & \text{for other}\\ \quad \quad \ \beta_0 + \beta_1 \texttt{Height}, & \text{for females} \end{cases} \]

\(\beta_2\) describes the shift from Females (our reference) to Males

\(\beta_3\) describes the shift from Females (our reference) to Other

Interaction with Mixed Predictors

We can include interactions with mixed-type predictors as well.

\[ \begin{align*} \texttt{Weight} &= \beta_0 + \beta_1\texttt{Height} + \beta_2\texttt{Male} + \beta_3(\texttt{Height}\times\texttt{Male}) \end{align*} \]

Now for males (Male =1) we have:

\[ \begin{align*} \text{Weight} &= \beta_0 + \beta_1(\text{Height}) + \beta_2(1) \\&+ \beta_3(\text{Height}\times 1) \\ &= (\beta_0 + \beta_2) + (\beta_1 + \beta_3) (\text{Height}) \end{align*} \]

For female (Male = 0) we have:

\[ \begin{align*}\text{Weight} &= \beta_0 + \beta_1(\text{Height}) + \beta_2(0) \\&+ \beta_3(\text{Height}\times 0) \\ &= \beta_0 + \beta_1\times \text{Height} \end{align*} \]

We see that they have different intercepts and slopes.

Non-parallel lines

Show the code

# store coeffiecents

icoefs <- intlm$coefficients

# Plot -------

par(mar = c(4.9, 3.9, 1, 1))

plot(Weight~Height, col=Gender+1)

legend("topleft",col=c(2,1),pch=1,

legend=c("Male","Female"))

# plot the line for males

abline(a = icoefs[1]+ icoefs[3],

b = icoefs[2] + icoefs[4],

col=2, lwd=2)

# plot the line for female

abline(a = icoefs[1],

b= icoefs[2], lwd=2)

Interaction Model: Height*Gender

...

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) -43.81929 13.77877 -3.180 0.00156 **

Height 0.63334 0.08351 7.584 1.63e-13 ***

Gender -17.13407 19.56250 -0.876 0.38152

Height:Gender 0.14923 0.11431 1.305 0.19233

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 8.795 on 503 degrees of freedom

Multiple R-squared: 0.5682, Adjusted R-squared: 0.5657

F-statistic: 220.7 on 3 and 503 DF, p-value: < 2.2e-16

...Issues to look out for

- Non-linear relationships

- Correlation of error terms

- Non-constant variance of error terms

- Outliers/Influential observations (high-leverage points)

- Collinearity of predictors

Non-linearity Examples

Suppose we have a case where the response has a non-linear relationship with the predictor(s).

For example, what if there’s a quadratic relationship?

We can extend the linear model in a very simple way to accommodate non-linear relationships, using polynomial regression.

Polynomial Regression

Easy. Fit a model of the form \[Y = \beta_0 + \beta_1 X + \beta_2 X^2 + \epsilon\]

Basically, if we square (or otherwise transform) the original predictor, we can still fit a “linear” model for the response.

In line with the hierarchical principle, if you keep \(X^2\) in your model, you should also keep \(X\).

Though it’s important to keep in mind the change in interpretation for \(\beta_1\) and \(\beta_2\), for example

Higher Order Polynomials

Polynomial regression can extend to to higher order terms, e.g. quadratic terms (\(X^2\)), cubic terms (\(X^3\)), and so on: \[Y = \beta_0 + \beta_1 X + \beta_2 X^2 + \beta_3 X^3 + \dots + \epsilon\]

This is still considered a linear regression model because it is linear with respect to the coefficients (\(\beta_0\), \(\beta_1\), \(\beta_2\), \(\beta_3\),…).

While polynomial regression is a linear model w.r.t the model parameters, it can capture nonlinear relationships between the independent and dependent variables by introducing polynomial terms.

Example: Quadratic Simulation

We simulate 30 values from the following model where \(\epsilon\) is standard normally distributed. \[Y=15+2.3x-1.5x^2+\epsilon\]

Example: SLR fit

...

Call:

lm(formula = y ~ x)

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 13.3206 0.3592 37.081 < 2e-16 ***

x 1.7866 0.3248 5.501 7.06e-06 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 1.967 on 28 degrees of freedom

Multiple R-squared: 0.5194, Adjusted R-squared: 0.5023

F-statistic: 30.26 on 1 and 28 DF, p-value: 7.059e-06

......

Call:

lm(formula = y ~ x + I(x^2))

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 15.3556 0.2509 61.20 < 2e-16 ***

x 2.2773 0.1529 14.89 1.54e-14 ***

I(x^2) -1.6702 0.1578 -10.59 4.14e-11 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 0.8829 on 27 degrees of freedom

Multiple R-squared: 0.9067, Adjusted R-squared: 0.8998

F-statistic: 131.2 on 2 and 27 DF, p-value: 1.244e-14

...We can use the diagnostic plots as used last class to check fit.

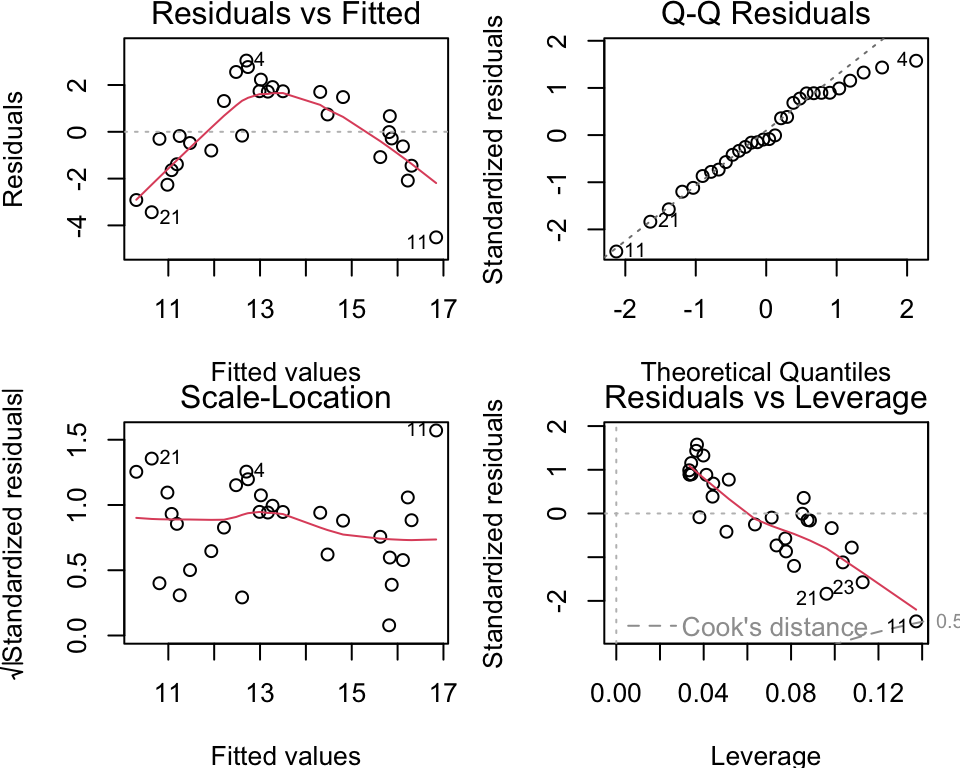

Diagnostic Plots

Residuals vs Fitted: checks for linearity. A “good” plot will have a red horizontal line, without distinct patterns.

Normal Q-Q: checks if residuals are normally distributed. It’s “good” if points follow the straight dashed line.

Scale-Location: checks the equal variance of the residuals. “Good” to see horizontal line with equally spread points.

Residuals vs Leverage: identifies influential points1.

Example: SLR Residuals

Example: Quadratic Residuals

Exemplary Residual Plot

Parametric models

A linear regression model is a type of parametric model; that is

lm()assumed a linear functional form for \(f(X)\).Parametric models make explicit assumptions about the functional form or distribution of the data.

Parametric models are characterized by a fixed number of parameters that need to be estimated from the data.

Non parametric models

Non-parametric models do not assume a specific functional form for the data.

They are more flexible and do not require a fixed number of parameters.

Instead of assuming a specific distribution or functional form, non-parametric models rely on the data itself to determine the model’s complexity.

K-nearest neighbors (KNN) regression, is one such example.

KNN Regression

Given positive integer \(K\) (chosen by user) and observation \(x_0\):

- Identify the \(K\) closest points to \(x_0\) in training data. Call this set \(N_0\)

- Estimate \(f(x_0)\) using \[\begin{equation} \hat f(x_0) = \frac{1}{K} \sum_{i \in N_0} y_i \end{equation}\]

See Ch 7 of Campbell, T., Timbers, T., Lee, M. (2022). Data Science: A First Introduction. United States: CRC Press.

K = 20

Visualization 1

Visualization 2

Animation

K = 5

K = 5 Visualization

K = 1

Conclusion

Small values of \(K\) are more flexible

- produce low bias but high variance

- At \(K=1\) the prediction in a given region is entirely dependent on just one observation.

In contrast, large values of \(K\) are “smoother” with less steps

- less variance (changing one observation has a smaller effect)

- more bias (smoothing masks some of the structure in \(f(X)\))

Again we see the bias-variance trade off in action!